Projects

Neural Network-based Robotic Grasping via Curiosity-driven Reinforcement Learning (NeuralGrasp)

Reinforcement Learning (RL) enables robots to learn behaviors, including complex manipulation and navigation tasks, autonomously and purely from trial-and-error interactions with the environment, resulting in an optimal policy that represents the desired behavior of the robot. However, exploring the environment to collect useful experience data is often difficult, especially for grasping tasks, which involve high-dimensional visual data and multiple degrees of freedom. The project proposes to address this problem by investigating exploration strategies inspired by infant cognition and sensorimotor development, particularly the use of curiosity as an intrinsic motivation.

PIs: Prof. Dr. Stefan Wermter, Dr. Cornelius Weber
Associates: Burhan Hafez
Details: NeuralGrasp Project
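Curiosity as an intrinsic motivation is commonly formalized as a bonus reward that grows with how poorly the agent can predict the consequences of its own actions. The sketch below illustrates that general idea under simplifying assumptions: a linear forward model trained online, with the squared prediction error used as the bonus. The class `CuriosityBonus` and its parameters are hypothetical and not taken from the NeuralGrasp project; a grasping agent would work on learned visual features rather than small raw vectors.

```python
import numpy as np


class CuriosityBonus:
    """Hypothetical curiosity module: the intrinsic reward is the squared
    error of a linear forward model that predicts next_state from (state, action)."""

    def __init__(self, state_dim, action_dim, lr=0.1, scale=1.0):
        self.W = np.zeros((state_dim, state_dim + action_dim))
        self.lr = lr
        self.scale = scale

    def __call__(self, state, action, next_state):
        x = np.concatenate([state, action])
        error = next_state - self.W @ x          # forward-model prediction error
        reward = self.scale * float(error @ error)
        # One gradient step on 0.5 * ||error||^2: transitions that are seen
        # repeatedly become predictable and therefore less rewarding.
        self.W += self.lr * np.outer(error, x)
        return reward


bonus = CuriosityBonus(state_dim=2, action_dim=1)
s, a, s_next = np.array([1.0, 0.0]), np.array([1.0]), np.array([0.5, 0.5])
rewards = [bonus(s, a, s_next) for _ in range(5)]
print(rewards)  # the bonus shrinks as the same transition becomes familiar
```

During training, such a bonus would typically be added to the task reward (for example `r_total = r_task + bonus(s, a, s_next)`), drawing the agent towards transitions it cannot yet predict.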

Bio-inspired Indoor Robot Navigation (BIONAV)

Bio-inspired robot navigation draws on neuroscience and on the navigation behaviors of animals, and makes hypotheses about how navigation skills are acquired and implemented in an animal's brain and body. This project aims to test some of these hypotheses and to develop the underlying mechanisms towards a biologically plausible navigation system for a real robot platform, including spatial representation, path planning and collision avoidance.

PIs: Prof. Dr. Stefan Wermter, Dr. Cornelius Weber
Associates: Xiaomao Zhou
Details: BIONAV Project

Project A5 - Crossmodal learning in a neurobotic cortical and midbrain model

The goals of project A5 are (1) to develop novel neurocomputational techniques to improve our understanding of the superior colliculus (SC) and linked cortical areas; (2) to design and implement a self-organizing audiovisual model of these cortico-collicular networks in a physical robot; and (3) to compare both the model’s neural activity and the robot’s physical behaviour to the neural activity and physical behaviour of humans. The project will produce a novel computational architecture that can direct a robot’s gaze in a natural way to relevant stimuli.

PIs: Prof. Dr. Stefan Wermter, Prof. Dr. Xun Liu
Associates: Pablo Barros, German Parisi
Details: A5 on www.crossmodal-learning.org

Project C4 - Vision- and action-embodied language learning

Project C4 focuses on language learning by an embodied agent, one that experiences its world through action and multisensory perception, in order to understand how spatio-temporal information is processed for language. The goal is to build an embodied neural- and knowledge-based model that processes audio, visual, and proprioceptive information and learns language grounded in these crossmodal perceptions. The learning architecture will be a multiple timescale recurrent neural network (MTRNN), a deep recurrent neural network designed both to model temporal dependencies at multiple timescales and to reflect the recurrent connectivity of the cortex in a conceptually less complex structure.

PIs: Prof. Dr. Zhiyuan Liu, Dr. Cornelius Weber, Prof. Dr. Stefan Wermter
Details: C4 on www.crossmodal-learning.org
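MTRNNs are usually built from leaky-integrator (continuous-time) neurons whose time constants differ between groups of units: units with a small time constant react quickly to their input, while units with a large one change slowly and can carry longer-term context. The sketch below shows a single update step under that assumption. The function name, the two-group layout and the time constants (2 and 30) are illustrative choices, not the parameters used in Project C4.

```python
import numpy as np


def mtrnn_step(u, x, W_rec, W_in, tau):
    """One discrete-time update of leaky-integrator units.

    u     -- internal (membrane) states, shape (n,)
    x     -- external input, shape (m,)
    W_rec -- recurrent weights, shape (n, n)
    W_in  -- input weights, shape (n, m)
    tau   -- per-unit time constants, shape (n,); larger means slower
    """
    h = np.tanh(u)                      # firing rates
    drive = W_rec @ h + W_in @ x        # recurrent plus external input
    return (1.0 - 1.0 / tau) * u + (1.0 / tau) * drive


# Hypothetical layout: 4 fast units (tau = 2) and 2 slow units (tau = 30).
tau = np.array([2.0] * 4 + [30.0] * 2)
n = tau.size
W_rec = np.zeros((n, n))                # no recurrence, to isolate the timescale effect
W_in = np.ones((n, 1))
u = np.zeros(n)
for _ in range(5):
    u = mtrnn_step(u, np.array([1.0]), W_rec, W_in, tau)
print(np.round(u, 3))  # fast units approach the input level much sooner than slow units
```

In a full MTRNN the groups are typically wired hierarchically (input/output units to fast context units, fast to slow) and all weights are trained, for example with backpropagation through time; the example above only isolates the effect of the time constants.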

SOCRATES - Social Cognitive Robotics in The European Society

To address the aim of successful multidisciplinary and intersectoral training, SOCRATES training is structured along two dimensions: thematic areas and disciplinary areas. The thematic perspective encapsulates functional challenges that apply to R&D. The following five thematic areas are identified as particularly important, and hence are given special attention in the project: Emotion, Intention, Adaptivity, Design, and Acceptance. The disciplinary perspective encapsulates the necessity of interdisciplinary, multidisciplinary and intersectoral solutions. Figure 1 illustrates how the six disciplinary areas are required to address research tasks in the five thematic areas. For instance, to successfully address an interface design task in WP5, theory and practice from all disciplinary areas typically must be utilised and combined.

SOCRATES organises research in five work packages WP2-6, corresponding to the thematic areas Emotion, Intention, Adaptivity, Design, and Acceptance.

PIs: Prof. Dr. Stefan Wermter
Project Managers: Dr. Sven Magg, Dr. Cornelius Weber
Associates: To be recruited.
Details: www.socrates-project.eu and http://www.socrates-project.eu/research/emotion-wp2/

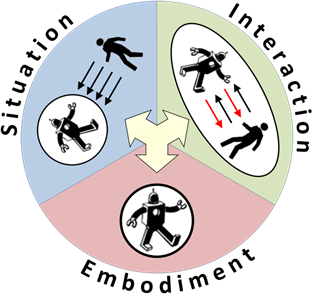

European Training Network: Safety Enables Cooperation in Uncertain Robotic Environments (SECURE)

The majority of existing robots in industry are pre-programmed robots working in safety zones, with visual or auditory warning signals and little of the intelligent safety awareness that dynamic and unpredictable human or domestic environments require. In the future, novel cognitive robotic companions will be developed, ranging from service robots to humanoid robots, which should be able to learn from users and adapt to open dynamic contexts. The development of such robot companions will lead to new challenges for human-robot cooperation and safety, going well beyond the current state of the art. Therefore, the SECURE project aims to train a new generation of researchers on safe cognitive robot concepts for human work and living spaces on the most advanced humanoid robot platforms available in Europe. The fellows will be trained through an innovative concept of project-based learning and constructivist learning in supervised peer networks, where they will gain experience from an intersectoral programme involving universities, research institutes, and large and SME companies from the public and private sectors. The training domain will integrate multidisciplinary concepts from the fields of cognitive human-robot interaction, computer science and intelligent robotics, where a new approach of integrating principles of embodiment, situation and interaction will be pursued to address future challenges for safe human-robot environments. This project is funded by the European Union’s Horizon 2020 research and innovation programme.

Coordinator: Prof. Dr. S. Wermter
Project Manager: Dr. S. Magg
Associates: Chandrakant Bothe, Egor Lakomkin, Mohammad Zamani
Details: www.secure-robots.eu

Cross-modal Learning (CROSS)

CROSS is a project aiming to prepare and initiate research between the life sciences (neuroscience, psychology) and computer science in Hamburg and Beijing in order to set up a collaborative research centre positioned interdisciplinarily between artificial intelligence, neuroscience and psychology while focusing on the topic of cross-modal learning. Our long-term challenge is to understand the neural, cognitive and computational evidence of cross-modal learning and to use this understanding for (1) better analyzing human performance and (2) building effective cross-modal computational systems.

Coordinators: Prof. Dr. S. Wermter, Prof. Dr. J. Zhang
Collaborators: Prof. Dr. B. Röder, Prof. Dr. A. K. Engel
Details: CROSS Project

Echo State Networks for Developing Language Robots (EchoRob)

The project "EchoRob" (Echo State Networks for Developing Language Robots) aims at teaching different languages to robots using recurrent neural networks, namely Echo State Networks (ESNs). The general aim has two sub-goals: to enable ordinary (non-programming) users to teach language to robots, and to give insights into how children acquire language.

Leading Investigators: Dr. X. Hinaut, Prof. Dr. S. Wermter
Associates: J. Twiefel, Dr. S. Magg
Details: EchoRob Project
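An Echo State Network keeps a large, randomly connected recurrent "reservoir" fixed and trains only a linear readout, so training reduces to a single regularized least-squares problem. The sketch below shows this general mechanism on a toy task, one-step-ahead prediction of a sine wave. The class name, reservoir size, spectral radius, input scaling and ridge parameter are illustrative assumptions; the language tasks studied in EchoRob are not reproduced here.

```python
import numpy as np


class EchoStateNetwork:
    """Minimal ESN sketch: fixed random reservoir, ridge-regression readout."""

    def __init__(self, n_inputs, n_reservoir=100, spectral_radius=0.9,
                 input_scale=0.5, leak=1.0, ridge=1e-6, seed=0):
        rng = np.random.default_rng(seed)
        self.W_in = rng.uniform(-input_scale, input_scale, (n_reservoir, n_inputs))
        W = rng.uniform(-0.5, 0.5, (n_reservoir, n_reservoir))
        # Rescale so the largest absolute eigenvalue equals spectral_radius,
        # a common heuristic for obtaining fading memory (the echo state property).
        W *= spectral_radius / np.max(np.abs(np.linalg.eigvals(W)))
        self.W = W
        self.leak = leak
        self.ridge = ridge
        self.W_out = None

    def _states(self, inputs):
        """Drive the reservoir with inputs of shape (T, n_inputs); return (T, N) states."""
        x = np.zeros(self.W.shape[0])
        states = []
        for u in inputs:
            pre = self.W_in @ u + self.W @ x
            x = (1 - self.leak) * x + self.leak * np.tanh(pre)
            states.append(x.copy())
        return np.array(states)

    def fit(self, inputs, targets, washout=100):
        # Discard the initial transient, then solve (X^T X + ridge * I) W_out^T = X^T Y.
        X = self._states(inputs)[washout:]
        Y = targets[washout:]
        A = X.T @ X + self.ridge * np.eye(X.shape[1])
        self.W_out = np.linalg.solve(A, X.T @ Y).T   # only the readout is trained
        return self

    def predict(self, inputs):
        return self._states(inputs) @ self.W_out.T


t = np.arange(1200)
signal = np.sin(0.2 * t)[:, None]                    # shape (1200, 1)
esn = EchoStateNetwork(n_inputs=1).fit(signal[:999], signal[1:1000])
pred = esn.predict(signal[:-1])                      # pred[i] estimates signal[i + 1]
mse = float(np.mean((pred[1000:] - signal[1001:]) ** 2))  # steps not used for training
print(f"test MSE: {mse:.2e}")
```

Because the recurrent weights are never trained, the same reservoir could in principle be reused for several readouts; this cheap training is one commonly cited reason for using ESNs.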

Cooperating Robots (CoRob)

Object detection and labeling help robots understand the environment in which they will perform exploration and navigation tasks.

The success of tasks such as cleaning an environment depends on the correct recognition of objects or places that should be cleaned.

The use of depth sensors can help robots to create representations of these objects. The challenging aspect is to make a robot understand that the same object will look different each time, with different perceived sizes, shapes, colours, etc.

The objective of the CoRob Project is to develop approaches that allow a team of robots to learn and generalize the knowledge about objects acquired from their sensors. As this knowledge will be distributed across the team, efficient ways to transfer it between robots should also be implemented.

Leading Investigator: Prof. Dr. S. Wermter
Associates: Dr. M. Borghetti, Dr. S. Magg, Dr. C. Weber
Details: CoRob Project

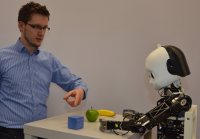

Gestures and Reference Instructions for Deep robot learning (GRID)

Communication is one of the most important tasks within the area of Human-Robot Interaction (HRI). Spoken language alone can sometimes lead to misunderstandings, or may not be sufficient to express, for example, reference instructions. This project proposes to solve this problem using deep neural architectures that simulate multi-modal processing in the human brain.

Leading Investigator: Prof. Dr. S. Wermter
Associates: P. Barros, Dr. S. Magg, Dr. C. Weber
Details: Grid Project

Teaching With Interactive Reinforcement Learning (TWIRL)

Reinforcement learning (RL) has proven to be a very useful approach, but it often learns slowly because it requires large-scale exploration. Interactive reinforcement learning (IRL) is a variant of RL, rarely used until now, that aims to improve the speed of convergence: the RL agent is supported by a human trainer who gives directions on how to tackle the problem.

Leading Investigator: Prof. Dr. S. Wermter
Associates: F. Cruz, Dr. S. Magg, Dr. C. Weber
Details: TWIRL Project
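One simple way to realize interactive reinforcement learning is to let a trainer occasionally choose the action for the agent, which otherwise learns with ordinary tabular Q-learning. The sketch below shows this on a toy corridor task. The environment, the always-correct `trainer` function and all parameter values are hypothetical, and TWIRL may use other forms of human guidance than direct action advice.

```python
import numpy as np


def run_irl(n_states=6, episodes=200, advice_prob=0.3, alpha=0.5,
            gamma=0.9, epsilon=0.1, seed=0):
    """Q-learning on a corridor (start at 0, goal at n_states - 1) with a
    hypothetical trainer who sometimes tells the agent which action to take."""
    rng = np.random.default_rng(seed)
    Q = np.zeros((n_states, 2))          # actions: 0 = left, 1 = right
    goal = n_states - 1

    def trainer(state):
        # Hypothetical human trainer who always knows the way to the goal.
        return 1

    steps_per_episode = []
    for _ in range(episodes):
        s, steps = 0, 0
        while s != goal:
            if rng.random() < advice_prob:
                a = trainer(s)                       # follow the trainer's advice
            elif rng.random() < epsilon:
                a = int(rng.integers(2))             # explore
            else:
                best = np.flatnonzero(Q[s] == Q[s].max())
                a = int(rng.choice(best))            # greedy, random tie-breaking
            s_next = max(0, s - 1) if a == 0 else s + 1
            r = 1.0 if s_next == goal else 0.0
            # No bootstrapping from the terminal goal state.
            target = r if s_next == goal else r + gamma * Q[s_next].max()
            Q[s, a] += alpha * (target - Q[s, a])
            s, steps = s_next, steps + 1
        steps_per_episode.append(steps)
    return Q, steps_per_episode


Q_plain, steps_plain = run_irl(advice_prob=0.0)
Q_irl, steps_irl = run_irl(advice_prob=0.3)
print(sum(steps_plain[:10]), sum(steps_irl[:10]))  # steps needed in the first 10 episodes
```

Setting `advice_prob` to 0 recovers plain Q-learning, which makes it easy to compare how quickly the two variants reach the goal in early episodes.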

Cross-modal Interaction in Natural and Artificial Cognitive Systems (CINACS)

CINACS is an international graduate college that investigates the principles of cross-modal interaction in natural cognitive systems in order to implement them in artificial systems. Research will primarily consider three sensory systems: vision, hearing and haptics. This project, carried out by the University of Hamburg and Tsinghua University, Beijing, is funded by the DFG and the Chinese Ministry of Education.

Spokesperson CINACS: Prof. Dr. J. Zhang
Leading Investigators: Prof. Dr. S. Wermter, Dr. C. Weber
Associates: J. Bauer, J. D. Chacón, J. Kleesiek
Details: CINACS Project

Cognitive Assistive Systems (CASY)

The programme “Cognitive Assistive Systems (CASY)” will contribute to research on the next generation of human-centred systems for human-computer and human-robot collaboration. A central need of these systems is a high level of robustness and increased adaptivity, so that they can act more naturally under uncertain conditions. To address this need, research will focus on cognitively motivated multi-modal integration and human-robot interaction.

Spokesperson CASY: Prof. Dr. S. Wermter
Leading Investigators: Prof. Dr. S. Wermter, Prof. Dr. J. Zhang, Prof. Dr. C. Habel, Prof. Dr.-Ing. W. Menzel
Details: CASY Project

Neuro-inspired Human-Robot Interaction

The aim of the research of the Knowledge Technology Group is to contribute to fundamental research by offering functional models for testing neuro-cognitive hypotheses about aspects of human communication, and by providing efficient bio-inspired methods to produce robust controllers for a communicative robot that successfully engages in human-robot interaction.

Leading Investigators: Prof. Dr. S. Wermter, Dr. C. Weber
Associates: S. Heinrich, D. Jirak, S. Magg
Details: HRI Project

Robotics for Development of Cognition (RobotDoC)

The RobotDoC Collegium is a multi-national doctoral training network for interdisciplinary training in developmental cognitive robotics. The RobotDoC Fellows will develop advanced expertise in domain-specific robotics research skills as well as complementary transferable skills for careers in academia and industry. They will acquire hands-on experience through experiments with the open-source humanoid robot iCub, complemented by other existing robots available in the network's laboratories.

Leading Investigators: Prof. Dr. S. Wermter, Dr. C. Weber
Associates: N. Navarro, J. Zhong
Details: RobotDoC Project

Knowledgeable SErvice Robots for Aging (KSERA)

KSERA investigates the integration of assistive home technology and service robotics to support older users in a domestic environment. The KSERA system helps older people, especially those with COPD (chronic obstructive pulmonary disease, a lung disease), with daily activities and care needs, and provides the means for effective self-management. The main aim is to design a pleasant, easy-to-use and proactive socially assistive robot (SAR) that uses context information obtained from sensors in the older person's home to provide useful information and timely support at the right place.

Leading Investigators: Prof. Dr. S. Wermter, Dr. C. Weber
Associates: N. Meins, W. Yan
Details: KSERA Project

What it Means to Communicate (NESTCOM)

What does it mean to communicate?

Many projects have explored verbal and visual communication in humans as well as motor actions. They have explored a wide range of topics, including learning by imitation, the neural origins of languages, and the connections between verbal and non-verbal communication. The EU project NESTCOM is setting out to analyse these results to contribute to the understanding of the characteristics of human communication, focusing specifically on their relationship to computational neural networks and the role of mirror neurons in multimodal communications.

Leading Investigator: Prof. Dr. S. Wermter
Associates: Dr. M. Knowles, M. Page
Details: NESTCOM Project

Midbrain Computational and Robotic Auditory Model for focused hearing (MiCRAM)

This research is a collaborative, interdisciplinary EPSRC project carried out by the University of Newcastle, the University of Hamburg and the University of Sunderland. The overall aim is to study sound processing in the mammalian brain and to build a biomimetic robot to validate and test neuroscience models of focused hearing. We collaboratively develop a biologically plausible computational model of auditory processing at the level of the inferior colliculus (IC). This approach will potentially clarify the roles of the multiple spectral and temporal representations present at the level of the IC, and will investigate how representations of sounds interact with auditory processing at that level to focus attention and select sound sources for robot models of focused hearing.

Leading Investigators: Prof. Dr. S. Wermter, Dr. H. Erwin
Associates: Dr. J. Liu, Dr. M. Elshaw
Details: MiCRAM Project

Biomimetic Multimodal Learning in a Mirror Neuron-based Robot (MirrorBot)

This project develops and studies emerging embodied representations based on mirror neurons. New techniques, including cell assemblies, associative neural networks and Hebbian-type learning, associate visual, auditory and motor concepts. The research is based on examining the emergence of representations of actions, perceptions, conceptions and language in a MirrorBot, a biologically inspired neural robot equipped with polymodal associative memory.

Details: MirrorBot Project
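Hebbian-type learning strengthens a connection in proportion to the joint activity of the two units it links. The sketch below uses this rule to build a simple hetero-associative memory that links "visual" patterns to "motor" patterns, so that presenting a visual pattern recalls its associated motor pattern. The bipolar encoding, the outer-product rule and all names are illustrative assumptions; this is not the MirrorBot model.

```python
import numpy as np


def hebbian_train(inputs, outputs, eta=1.0):
    """Outer-product Hebbian rule: W += eta * y x^T for every (x, y) pair."""
    W = np.zeros((outputs.shape[1], inputs.shape[1]))
    for x, y in zip(inputs, outputs):
        W += eta * np.outer(y, x)   # strengthen weights between co-active units
    return W


def recall(W, x):
    """Retrieve the associated pattern by thresholding the weighted input."""
    return np.where(W @ x >= 0, 1, -1)


# Hypothetical bipolar codes: two orthogonal "visual" patterns, two "motor" patterns.
visual = np.array([[1, 1, -1, -1],
                   [1, -1, 1, -1]])
motor = np.array([[1, -1, 1],
                  [-1, -1, 1]])
W = hebbian_train(visual, motor)
print(recall(W, visual[0]), recall(W, visual[1]))
```

Recall is exact here because the two visual patterns are orthogonal, so their contributions to `W @ x` do not interfere; with correlated or noisy inputs, such a simple memory can return mixed patterns.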