Description

OMG Emotion Dataset

The competition is based on the One-Minute Gradual-Emotional Behavior dataset (OMG-Emotion dataset), which was collected and annotated exclusively for this competition.

This dataset is composed of Youtube videos which are around a minute in length and are annotated taking into consideration a continuous emotional behavior.

The videos were selected using a crawler technique that uses specific keywords based on long-term emotional behaviors such as "monologues", "auditions",

"dialogues" and "emotional scenes".

After the videos were selected, we created an algorithm to identify if the video has at least two different modalities which contribute for

the emotional categorization: facial expressions, language context, and a reasonably noiseless environment. We selected a total of 420 videos,

totaling around 10 hours of data. Here are examples of two of the videos in our dataset:

Samples from the One-Minute Gradual-Emotional Behavior dataset (OMG-Emotion dataset). For each video, audio, video and text modalities are provided.

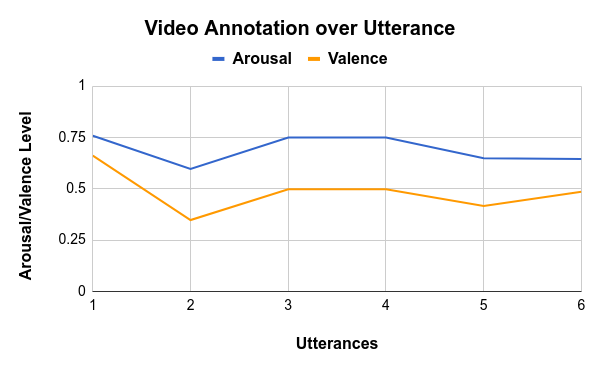

We used the Amazon Mechanical Turk platform to create utterance-level annotation for each video, exemplified in the Figure below. We assure that for each video have a total of 5 different annotations. To make sure that the contextual information was taken into consideration, the annotator watched the whole video in a sequence and was asked, after each utterance, to annotate the arousal and valence of what was displayed. We provide a gold standard for the annotations. This results in trustworthy labels that are truly representative of the subjective annotations from each of the annotators, providing an objective metric for evaluation. That means that for each utterance we have one value of arousal and valence. We then calculate the Concordance Correlation Coefficient (CCC) for each video, which represents the correlation between the annotations and varies from -1 (total disagreement) to 1 (total agreement).

Arousal/Valence annotation from one of our videos.

We release the dataset with the gold standar for arousal and valence as well the invidivual annotations for each reviewer, which can help the development of different models. We will calculate the final CCC against the goldstandard for each video. We also distribute the transcripts of what was spoken in each of the videos, as the contextual information is important to determine gradual emotional change through the utterances. The participants are encouraged to use crossmodal information in their models, as the videos were labeled by humans without distinction of any modality.

We also will let available to the participant teams a set of scripts which will help them to pre-process the dataset and evaluate their model during in the training phase.

Distribution and License

This corpus is distributed under the Creative Commons CC BY-NC-SA 3.0 DE license. If you use this corpus, you have to agree with the following itens:

- To cite our reference in any of your papers that make any use of the database.

- To use the corpus for research purpose only.

- To not provide the corpus to any second parties.

Access to the dataset: https://github.com/knowledgetechnologyuhh/OMGEmotionChallenge

References

Barros, P., Churamani, N., Lakomkin, E., Siqueira, H., Sutherland, A., & Wermter, S. (2018). The OMG-Emotion Behavior Dataset. arXiv preprint arXiv:1803.05434.