Cross-modal Interaction in Natural and Artificial Cognitive Systems (CINACS)

General Information

Our research concept focuses on cross-modal interaction and integration. A unique and novel feature of the project is the integration of biological and engineering/robotics approaches, which allows complementary knowledge to be created in these fields. We also expect the insights obtained to be exploited in potential clinical and technical applications.

Research in the Knowledge Technology Group is inspired by principles found in natural systems (biology, cognition, and neuroscience). In particular, there is a common interest in the foundations and applications of intelligent systems. The topics addressed in the research agenda include multisensory perception, representation and attention, cross-modal learning, association and problem-solving, and multimodal communication.

Leading Investigators: Prof. Dr. S. Wermter, Dr. C. Weber

Associates: J. Bauer, J. D. Chacón, J. Kleesiek

Multi-Modal Orientation Alignment for Action Selection Based on Superior Colliculus - Johannes Bauer

The superior colliculus (SC) is a brain region widely recognized to play a vital role in spatially integrating different kinds of sensory information and in generating motor responses, particularly orienting eye movements. An especially interesting aspect of the SC is its ability to enhance weak or noisy inputs to the individual sensory modalities by combining them and, when inputs disagree, to suppress one input in favor of another depending on its quality and modality.
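The enhancement described above is often illustrated with reliability-weighted cue combination, in which independent noisy estimates are averaged with weights proportional to their inverse variances. The sketch below is a textbook model chosen for illustration, not the project's SC network; the function name `combine_cues` and the example azimuth values and variances are hypothetical. It shows two effects qualitatively: the fused estimate is more precise than either input, and when cues disagree the more reliable one dominates. It does not model response depression.

```python
def combine_cues(estimates, variances):
    """Fuse independent Gaussian cues by inverse-variance (reliability) weighting.

    Returns (fused_estimate, fused_variance). The fused variance is never larger
    than the smallest input variance, a simple analogue of multisensory enhancement.
    """
    if not estimates or len(estimates) != len(variances):
        raise ValueError("need one variance per estimate")
    if any(v <= 0 for v in variances):
        raise ValueError("variances must be positive")
    precisions = [1.0 / v for v in variances]  # reliability of each cue
    total = sum(precisions)
    fused = sum(p * x for p, x in zip(precisions, estimates)) / total
    return fused, 1.0 / total


if __name__ == "__main__":
    # Hypothetical azimuth estimates in degrees: a visual and a noisier auditory cue.
    est, var = combine_cues([10.0, 20.0], [4.0, 16.0])
    print(est, var)  # prints 12.0 3.2
```

Here the fused estimate of 12° lies close to the more reliable visual cue (variance 4), and its variance of 3.2 is below that of either input.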

In this project, we will build and evaluate neural network models of the processes in the SC, paying special attention to enhancement and depression. Our goal is to make the alignment of multisensory information and action selection in robots more robust, and thus to help robots navigate in noisy environments.

At this early stage of the project, we are collecting what is known about the mechanisms of enhancement and depression to build a uniform picture of the state of the art. We are also selecting candidate neural network types for our model.

Sound source localisation for assisting object identification and robot orientation - Jorge Dávila Chacón

Humans and other animals show a remarkable ability to identify objects visually and to localize sound sources using the disparities between the sound waves received by the two ears. At the same time, they integrate sound sources and visual objects into a coherent representation of the environment. Can object identification based on hearing be used for online control of robot orientation and navigation? In particular, an acoustic source of interest should be localized and tracked robustly in acoustically cluttered environments, in order to support visual object identification for a mobile service robot. The project aims to build a computational neural model based on the midbrain for guiding robot actions.

The midbrain architecture of the inferior colliculus will serve as a biomimetic model for sound localization, tracking, and separation in acoustically cluttered environments. The initial model is planned as a hybrid architecture. In a first stage, cross-correlation and recurrent neural networks will be used to develop a robotic model that is accurate and robust enough to perform in an acoustically cluttered environment. In a second stage, we plan to develop a spiking neural network architecture based on the mammalian subcortical auditory pathway to achieve binaural sound source localisation. A humanoid Nao robot will be used for the robotic experiments.
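The first-stage cross-correlation step can be illustrated by estimating the interaural time difference (ITD) between two microphone signals and converting it to an azimuth with the far-field relation sin(theta) = c * ITD / d. This is a minimal, brute-force sketch assuming two microphones a distance d apart and a speed of sound c of 343 m/s; the function names and the example values (16 kHz sampling, d = 0.12 m) are hypothetical and not taken from the Nao's specifications. The recurrent and spiking stages of the planned model are not reproduced.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, in air at about 20 degrees Celsius


def estimate_itd(left, right, sample_rate, max_lag):
    """Return the ITD in seconds that maximizes the cross-correlation.

    A positive result means the right signal lags the left one, i.e. the
    sound reached the left microphone first.
    """
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Correlate left[i] with right[i + lag] over the overlapping samples.
        score = 0.0
        for i, x in enumerate(left):
            j = i + lag
            if 0 <= j < len(right):
                score += x * right[j]
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag / sample_rate


def itd_to_azimuth(itd, mic_distance):
    """Far-field model: sin(theta) = c * itd / d; positive angles toward the left mic."""
    s = SPEED_OF_SOUND * itd / mic_distance
    s = max(-1.0, min(1.0, s))  # clamp overshoot caused by noise or coarse lags
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    fs, d = 16000, 0.12  # hypothetical sampling rate (Hz) and mic spacing (m)
    left = [0.0] * 64
    left[20] = 1.0   # a click reaches the left microphone first
    right = [0.0] * 64
    right[25] = 1.0  # and arrives 5 samples later at the right one
    max_lag = math.ceil(d / SPEED_OF_SOUND * fs)  # largest physically possible lag
    itd = estimate_itd(left, right, fs, max_lag)
    print(f"ITD = {itd * 1e6:.1f} us, azimuth = {itd_to_azimuth(itd, d):.1f} deg")
```

Limiting `max_lag` to roughly d / c times the sampling rate restricts the search to physically possible delays. A practical system would usually compute the correlation with an FFT and interpolate around the peak for sub-sample resolution.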

Action-Driven Perception - Neural Architectures for Sensorimotor Laws - Jens Kleesiek

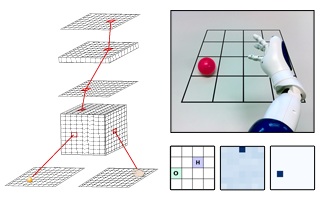

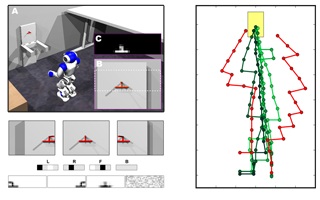

Actions are fundamental for perception and help to distinguish the qualities of sensory experiences in different sensory modalities (e.g. 'seeing' or 'touching'). The intimate relation between action, perception, and cognition has long been emphasized in philosophy and cognitive science, for example by the enactive approach, which suggests that cognitive behavior results from the interaction of organisms with their environment [Varela 1991]. However, most current robotic approaches do not rely on active perception. A robot is embodied: it can act and perceive, and its action-triggered sensations are shaped by the physical properties of its body, of the world, and of the interplay of both. This concept is exploited to solve various robotic tasks, such as reaching towards an object (figure on the left) and visually guided navigation (figure below).

Successful grasping requires two steps. First, the position of the target has to be identified and its relation to the hand has to be known; second, the hand has to be moved towards the object. To learn both the relation between object and hand and the movement of the hand towards the object, a novel two-layer neural architecture combining unsupervised learning of Sigma-Pi neurons with reinforcement learning (RL) has been developed [Kleesiek 2010]. In RL, the prediction error between estimates of neighboring state values is computed and in turn used to modulate the learning of action weights that encode both the value function and the action strategy. We show that this prediction error can at the same time be used to adapt the weights of the Sigma-Pi neurons, leading to the sensorimotor laws required for successful reaching.
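A Sigma-Pi neuron sums weighted products of its inputs, so a second-order unit can respond to the conjunction of a target code and a hand code. The sketch below shows such a unit, a temporal-difference (TD) prediction error, and a weight update scaled by that error. It is an illustration under stated assumptions, not the architecture of [Kleesiek 2010]: the function names, the discount factor, the learning rate, and the gradient-style update rule are hypothetical, and the unsupervised learning component is omitted.

```python
def sigma_pi(weights, v, p):
    """Second-order Sigma-Pi unit: y = sum_ij w[i][j] * v[i] * p[j].

    v and p could encode, e.g., a visual target position and a proprioceptive
    hand position; the product terms let the unit respond to their relation.
    """
    return sum(
        weights[i][j] * v[i] * p[j]
        for i in range(len(v))
        for j in range(len(p))
    )


def td_error(reward, value_next, value_now, gamma=0.9, terminal=False):
    """TD prediction error between the value estimates of neighboring states."""
    target = reward if terminal else reward + gamma * value_next
    return target - value_now


def modulated_update(weights, v, p, delta, lr=0.1):
    """Return new Sigma-Pi weights after a TD-error-scaled step (hypothetical rule).

    Each weight moves by lr * delta * v[i] * p[j]: the gradient of the unit's
    output with respect to that weight, scaled by the prediction error.
    """
    return [
        [weights[i][j] + lr * delta * v[i] * p[j] for j in range(len(p))]
        for i in range(len(v))
    ]


if __name__ == "__main__":
    # Hypothetical one-hot codes: target at position 0, hand at position 1.
    w = [[0.0, 0.0], [0.0, 0.0]]
    v, p = [1.0, 0.0], [0.0, 1.0]
    # Hypothetical value estimates supplied by the RL layer; the goal is reached.
    delta = td_error(reward=1.0, value_next=0.0, value_now=0.0, terminal=True)
    w = modulated_update(w, v, p, delta)
    print(delta, w)  # prints 1.0 [[0.0, 0.1], [0.0, 0.0]]
```

Only the weight w[0][1], which links this target code to this hand code, grows, so the same error signal that drives value learning also shapes the conjunctive representation.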

A similar concept can also be exploited for the autonomous visual navigation of a humanoid robot [Kleesiek 2011]. The robot learns sensorimotor laws and visual features simultaneously and exploits both to navigate towards a docking position. The control laws are trained using a two-layer network consisting of a feature (sensory) layer that feeds into an action layer. Again, the RL prediction error modulates not only the action weights but, at the same time, the feature weights [Weber 2009]. Under this influence, the network learns interpretable visual features and assigns goal-directed actions to them.
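The shared use of one prediction error in a feature layer and an action layer can be sketched with a SARSA-style TD update: the error scales the change of the action weights and, through the weights of the chosen action, the change of the feature weights. This is a minimal sketch assuming linear features and linear action values; [Weber 2009] may differ in details such as feature competition and action selection, and all names and parameters here are hypothetical. Action selection (for example softmax or epsilon-greedy exploration) is left to the caller.

```python
def features(W, x):
    """Linear feature (sensory) layer: f_k = sum_i W[k][i] * x[i]."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def action_values(Q, f):
    """Linear action layer: q_a = sum_k Q[a][k] * f[k]."""
    return [sum(q * fk for q, fk in zip(row, f)) for row in Q]


def sarsa_step(W, Q, x, a, r, x_next=None, a_next=None,
               gamma=0.9, lr=0.05, terminal=False):
    """One TD-modulated update of both layers, in place; returns the TD error."""
    f = features(W, x)
    q_now = action_values(Q, f)[a]
    if terminal:
        q_next = 0.0
    else:
        q_next = action_values(Q, features(W, x_next))[a_next]
    delta = r + gamma * q_next - q_now  # TD prediction error

    old_q_row = list(Q[a])  # chosen action's weights before this update
    # Action layer: d q_a / d Q[a][k] = f[k].
    Q[a] = [q + lr * delta * fk for q, fk in zip(Q[a], f)]
    # Feature layer: d q_a / d W[k][i] = Q[a][k] * x[i], scaled by the same delta.
    for k, row in enumerate(W):
        W[k] = [w + lr * delta * old_q_row[k] * xi for w, xi in zip(row, x)]
    return delta


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]  # 2 features computed from 2 inputs
    Q = [[0.0, 0.0], [0.0, 0.0]]  # 2 actions computed from 2 features
    for _ in range(200):
        # Hypothetical episode: action 0 in state x = [1, 0] is rewarded and ends it.
        delta = sarsa_step(W, Q, [1.0, 0.0], a=0, r=1.0, terminal=True)
    print(round(delta, 6), W[0][0] > 1.0)
```

In this sketch, feature weights change only through the weights of the chosen action, so the features are shaped by what the action layer finds rewarding.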

[Varela 1991] F. J. Varela, E. Thompson, and E. Rosch. The Embodied Mind: Cognitive Science and Human Experience. Cambridge, MA: MIT Press, 1991.

[Weber 2009] C. Weber and J. Triesch. Goal-Directed Feature Learning. In Proceedings of the 2009 International Joint Conference on Neural Networks (IJCNN'09), pp. 3355-3362. Piscataway, NJ, USA: IEEE Press, 2009.

Project Publications

Further Information

Project Website: http://www.cinacs.org/

The CINACS International Research Training Group is funded by the DFG (IGK 1247). It is a joint project of the University of Hamburg and Tsinghua University, Beijing, and provides outstanding, internationally competitive research and PhD training.
