The ability to efficiently process crossmodal information is a key feature of the human brain, supporting a robust perceptual experience and adequate behavioural responses.

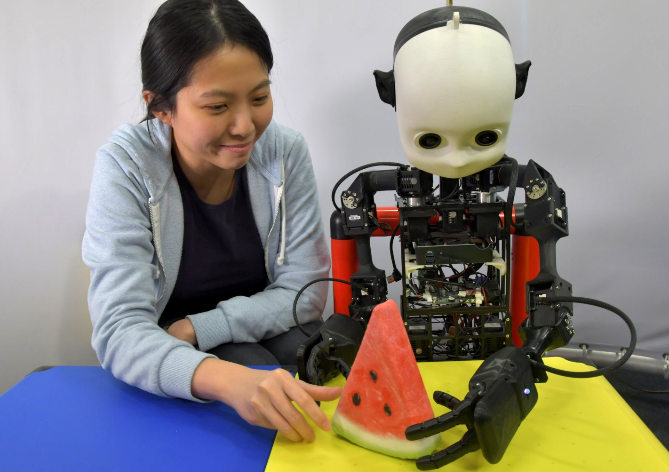

Consequently, the processing and integration of multisensory information streams such as vision, audio, haptics and proprioception play a crucial role in the development of autonomous agents and cognitive robots, enabling efficient interaction with the environment even under conditions of sensory uncertainty.

Multisensory representations have been shown to improve performance in human-robot interaction and sensory-driven motor behaviour. The perception, integration, and segregation of multisensory cues improve the capability to physically interact with objects and people with higher levels of autonomy. However, multisensory input must be represented and integrated appropriately so that it results in a reliable perceptual experience that triggers adequate behavioural responses. The interplay of multisensory representations can be used to resolve stimulus-driven conflicts in executive control. Embodied agents can develop complex sensorimotor behaviour through interaction with a crossmodal environment, leading to the development and evaluation of scenarios that better reflect the challenges faced by robots operating in the real world. Such challenges include the modelling of lifelong learning, curriculum and developmental learning, and the autonomous exploration of the environment driven by intrinsic motivation and self-supervision. For these reasons, modelling crossmodal processing in robots is of crucial interest for learning, memory, cognition, and behaviour, particularly when multisensory input is uncertain, ambiguous, or incongruent.

This half-day workshop focuses on presenting and discussing new findings, theories, systems, and trends in crossmodal learning applied to neurocognitive robotics. The workshop will feature invited speakers with outstanding expertise in crossmodal learning.


Topics of interest

- New methods and applications for crossmodal processing (e.g., integrating vision, audio, haptics, proprioception)

- Machine learning and neural networks for multisensory robot perception

- Computational models of crossmodal attention and perception

- Bio-inspired approaches for crossmodal learning

- Crossmodal conflict resolution and executive control

- Sensorimotor learning for autonomous agents and robots

- Crossmodal learning for embodied and cognitive robots

Important dates:

- Paper submission deadline: August 29, 2018

- Notification of acceptance: September 10, 2018

- Camera-ready version: September 17, 2018

- Workshop: Friday, October 5, 2018

Accepted papers will be presented during the workshop as spotlight talks and/or in a poster session.

Authors of selected papers from the workshop will be invited to submit extended versions of their manuscripts to a journal special issue (to be arranged).